A British R&D unit today unveiled a futuristic vision of “quantitative safety guarantees” for AI.

The Advanced Research and Invention Agency (ARIA) compares the guarantees to the high safety standards in nuclear power and passenger aviation. In the case of machine learning, the standards involve a probabilistic guarantee that no harm will result from a particular action.

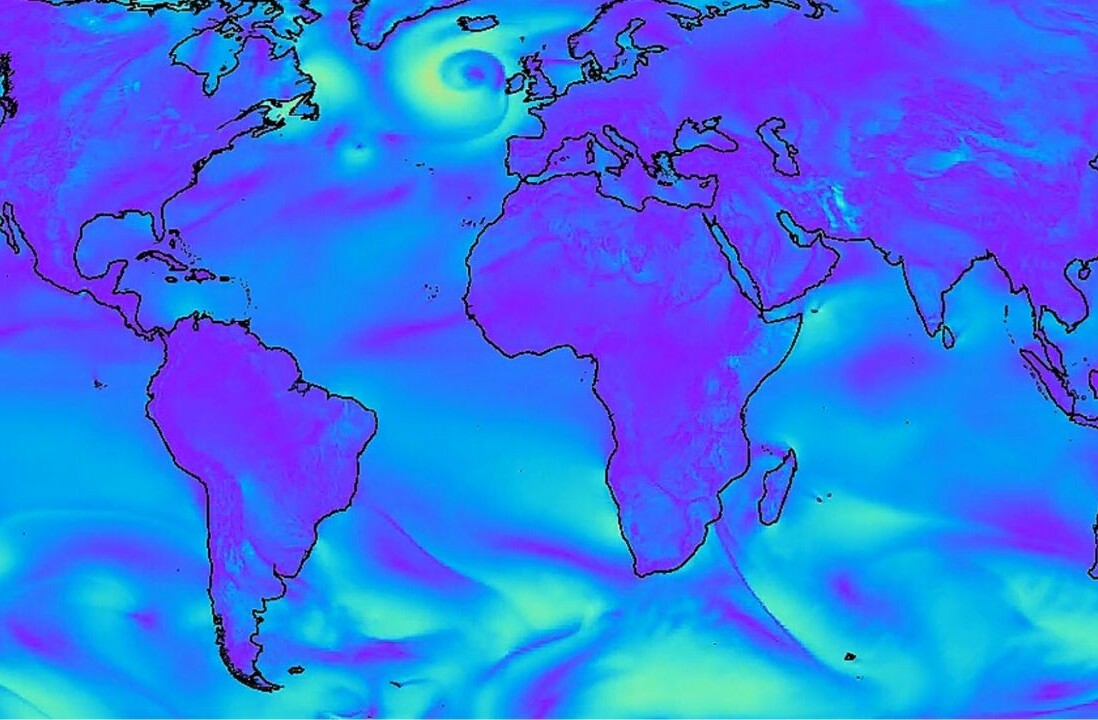

At the core of ARIA’s plan is a “gatekeeper” AI. This digital sentinel will ensure that other AI agents only operate within the guardrails set for a specific application.
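To make the idea concrete, here is a minimal Python sketch of a gatekeeper that vets an agent's proposed actions against a safety bound. It is an illustration only, not ARIA's design: the names `Action`, `Gatekeeper`, `toy_harm_probability`, and `filter_actions` are hypothetical, and the toy risk function stands in for the scientific world models and mathematical proofs the programme envisions.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Action:
    """A proposed action from an AI agent (hypothetical grid-balancing example)."""
    name: str
    megawatts: float  # size of the proposed power adjustment


class Gatekeeper:
    """Approves an action only if its estimated harm probability is within a bound.

    Assumption: a real gatekeeper would derive this bound from a verified
    world model; here the estimator is just a function passed in by the caller.
    """

    def __init__(self, harm_probability: Callable[[Action], float],
                 max_harm_probability: float) -> None:
        self.harm_probability = harm_probability
        self.max_harm_probability = max_harm_probability

    def approve(self, action: Action) -> bool:
        return self.harm_probability(action) <= self.max_harm_probability


def toy_harm_probability(action: Action) -> float:
    # Toy stand-in for a world model: risk grows linearly with the size
    # of the adjustment and is capped at 1.0.
    return min(1.0, abs(action.megawatts) / 1000.0)


def filter_actions(proposals: List[Action], gatekeeper: Gatekeeper) -> List[Action]:
    """Return only the proposals the gatekeeper lets through."""
    return [a for a in proposals if gatekeeper.approve(a)]


gatekeeper = Gatekeeper(toy_harm_probability, max_harm_probability=0.01)
proposals = [Action("small_adjustment", 5.0), Action("large_adjustment", 500.0)]
allowed = filter_actions(proposals, gatekeeper)
```

In this toy setup, only the small adjustment passes, because the large one exceeds the 1% bound. The point is the separation of roles: the agent proposes, and a separate component decides whether the proposal stays inside the guardrails.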

ARIA will direct £59 million towards the scheme. By the programme’s end, the agency intends to demonstrate a scalable proof-of-concept in one domain. Suggestions include electricity grid balancing and supply chain management.

If effective, the project could safeguard high-stakes AI applications, such as improving critical infrastructure or optimising clinical trials.

The programme is the brainchild of David ‘davidad’ Dalrymple, who co-invented the popular cryptocurrency Filecoin.

Dalrymple has also extensively researched technical AI safety, which sparked his interest in the gatekeeper approach. As the programme director of ARIA, he can now put his theory into practice.

The gatekeeper guarantee

ARIA’s gatekeepers will draw on scientific world models and mathematical proofs. Dalrymple said the concept combines commercial and academic approaches.

“The approaches being explored by big AI companies rely on finite samples and do not provide any guarantees about the behaviour of AI systems at deployment,” he told TNW via email.

“Meanwhile, if we focus too heavily on academic approaches like formal logic, we run the risk of effectively trying to build AI capabilities from scratch.

“The gatekeeper approach gives us the best of both worlds by tuning frontier capabilities as an engine to drive at speed, but along rails of mathematical reasoning.”

This fusion requires deep interdisciplinary collaboration — which is where ARIA comes in.

The British DARPA?

Established last year, ARIA funds “high-risk, high-reward” research. The strategy has attracted comparisons to DARPA, the Pentagon’s “mad science” unit.

Dalrymple has drawn another parallel with DARPA. He compares ARIA’s new project to DARPA’s HACMS program, which created an unhackable quadcopter. The project proved that formal verification can create bug-free software.

“Vulnerabilities can be ruled out, but only with assumptions about the scope and speed of interventions that an attacker can make on the physical embodiment of a system,” Dalrymple said.

His plan builds on an approach endorsed by Yoshua Bengio, a renowned computer scientist. A Turing Award winner, Bengio has also called for “quantitative safety guarantees.” But he’s been disappointed by the progress thus far.

“Unlike methods to build bridges, drugs or nuclear plants, current approaches to train frontier AI systems — the most capable AI systems currently in existence — do not allow us to obtain quantitative safety guarantees of any kind,” Bengio wrote in a blogpost last year.

Dalrymple has a chance to change that. Success would also be a huge boost for ARIA, which has attracted scrutiny from politicians.

Some lawmakers have questioned ARIA’s budget. The body has won £800mn in funding over five years, a sizeable sum but a mere fraction of the budgets of other government research bodies.

ARIA can also point to potential savings on the horizon. One programme it launched last month aims to train AI systems at 0.1% of the current cost.
