This week the US government opened a formal investigation into Tesla’s Autopilot driver-assist software due to a series of collisions with emergency vehicles.

Since 2018, Tesla cars driven in Autopilot have crashed into parked first-responder vehicles 11 times, injuring 17 people and killing one. While that sounds serious, I wouldn’t bet on the investigation leading to real change.

How do you miss an emergency vehicle?!

Before diving into what investigations like these actually achieve, I think it’s safe to say the collisions are hardly a ringing endorsement for automation.

Sure, most of the incidents took place after dark, but the first-responder vehicles and their surroundings were all equipped with visibility features like lights, flares, an illuminated arrow board, and road cones. Stuff a human would pick up on in a split second.

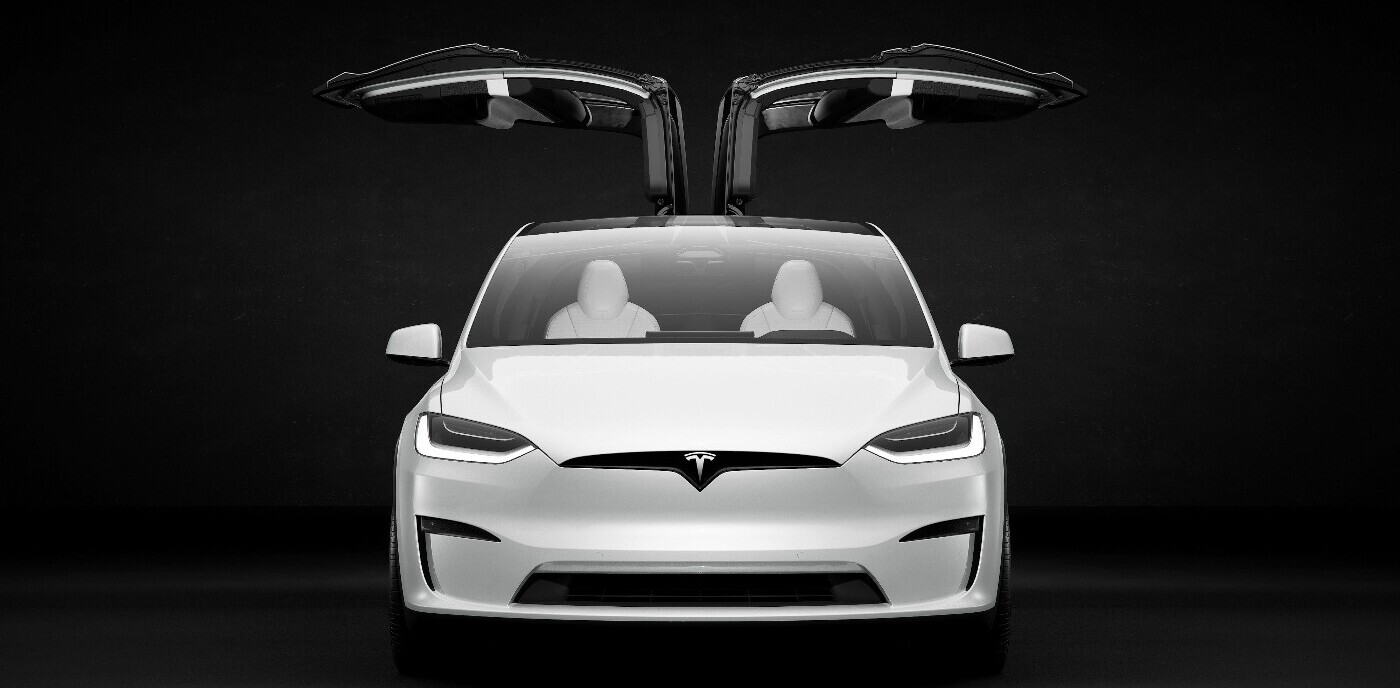

In response, the Office of Defects Investigation at the U.S. National Highway Traffic Safety Administration (NHTSA) and the National Transportation Safety Board (NTSB) opened a Preliminary Evaluation of the SAE Level 2 Autopilot in the Tesla Model Year 2014-2021 Models Y, X, S, and 3.

Tesla’s automation

Despite the name, Tesla’s ‘Autopilot’ is actually an Advanced Driver Assistance System (ADAS) that maintains the vehicle’s speed and lane centering in situations the carmaker has deemed safe.

That means drivers are still responsible for ‘Object and Event Detection and Response’ (OEDR).

In human-speak, that means you need to identify obstacles in the roadway yourself and react to any weird maneuvers other drivers might make. What the investigation will do is assess the technologies and methods used to monitor, assist, and enforce the driver’s engagement during Autopilot operation. But will it be enough to ensure other road users are safe?

How to stop people driving Teslas like dicks

A few months ago, the NHTSA and the National Transportation Safety Board said they were looking into the company following a crash in Texas. A 2019 Tesla Model S crashed into a tree and burst into flames.

One person was found in the front passenger seat, and another in the rear passenger seat of the car. Both men died.

However, there is no evidence the car was in Autopilot, according to Elon Musk on Twitter:

“Data logs recovered so far show Autopilot was not enabled & this car did not purchase FSD. Moreover, standard Autopilot would require lane lines to turn on, which this street did not have.”

Elon Musk (@elonmusk), April 19, 2021

That said, some Tesla drivers do use Autopilot to sleep, read, have sex, and sit in the passenger or back seat while they are supposed to be controlling their Teslas.

Tesla has implemented various safeguards to combat this — such as dashboard prompts, warnings, speed limits, and annoying alarms — but they need to be better.

For example, a Tesla will sound an alarm if you unbuckle the seatbelt. However, a driver can circumvent this by simply climbing out of the seat and refastening the seatbelt over the empty seat.

So it shouldn’t come as a surprise (people suck) that YouTube is full of videos of people explicitly not paying attention while their Teslas are driving in Autopilot.

What can be done?

First of all, Tesla could do its part by continuing to improve its safety measures, for instance by checking whether there’s sufficient weight in the driver’s seat rather than focusing only on whether the seatbelt is fastened.

Tesla is set up to monitor drivers by detecting force from hands on the steering wheel. The system will issue warnings and eventually shut the car down if it doesn’t detect hands.

However, there are claims the system is easy to fool and can take up to a minute to shut down. Consumer Reports researchers were able to trick a Tesla into driving in Autopilot mode with no one at the wheel. There have since been reports of Tesla using an in-car camera to verify that someone is in the driver’s seat.
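To make the idea concrete, here is a minimal sketch of how a seat-weight check could sit alongside the seatbelt and hands-on-wheel checks. This is not Tesla’s code or logic: the names `CabinState` and `driver_check` are hypothetical, and every threshold is a made-up value for illustration (the 60-second cutoff only loosely echoes the “up to a minute” claim).

```python
from dataclasses import dataclass

# All thresholds are hypothetical values chosen for illustration;
# they are assumptions, not Tesla's actual parameters.
MIN_SEAT_WEIGHT_KG = 30.0   # below this, treat the driver's seat as empty
WARN_AFTER_S = 15.0         # no hands detected for this long: warn
DISENGAGE_AFTER_S = 60.0    # no hands detected for this long: disengage


@dataclass
class CabinState:
    """One snapshot of (hypothetical) cabin sensor readings."""
    seatbelt_fastened: bool
    seat_weight_kg: float
    seconds_since_hands_detected: float


def driver_check(state: CabinState) -> str:
    """Return "ok", "warn", or "disengage" for a single sensor snapshot."""
    # A fastened belt alone is not enough: the seat must also be occupied.
    if not state.seatbelt_fastened or state.seat_weight_kg < MIN_SEAT_WEIGHT_KG:
        return "disengage"
    # Escalate based on how long the wheel has gone without detected hands.
    if state.seconds_since_hands_detected >= DISENGAGE_AFTER_S:
        return "disengage"
    if state.seconds_since_hands_detected >= WARN_AFTER_S:
        return "warn"
    return "ok"
```

Under these assumptions, a belt buckled over an empty seat, e.g. `driver_check(CabinState(True, 0.0, 0.0))`, is treated the same as an unbuckled belt, which closes the loophole described earlier. A real system would also have to cope with sensor noise and deliberate spoofing, which this sketch ignores.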

But when it comes to investigative bodies like the NTSB, it’s a bit tricky.

Investigators can make suggestions to carmakers based on their findings, but they can’t enforce anything. For example, the NTSB has recommended that the NHTSA limit Autopilot’s use and require Tesla to have better systems in place to make drivers pay attention.

Case in point, so far the NHTSA has not taken action on any of these recommendations.

The NHTSA does have the power to deem cars defective, and order recalls. But overall, it also seems to be essentially a toothless tiger.

So what do we do about abuses of autopilot features? Not much… until legislation catches up with autonomous vehicle technology to force companies to enact more safety measures.

In the meantime though, perhaps we can take solace in the increased investigations into Tesla, as it might signal a more aggressive governmental push towards safety.
